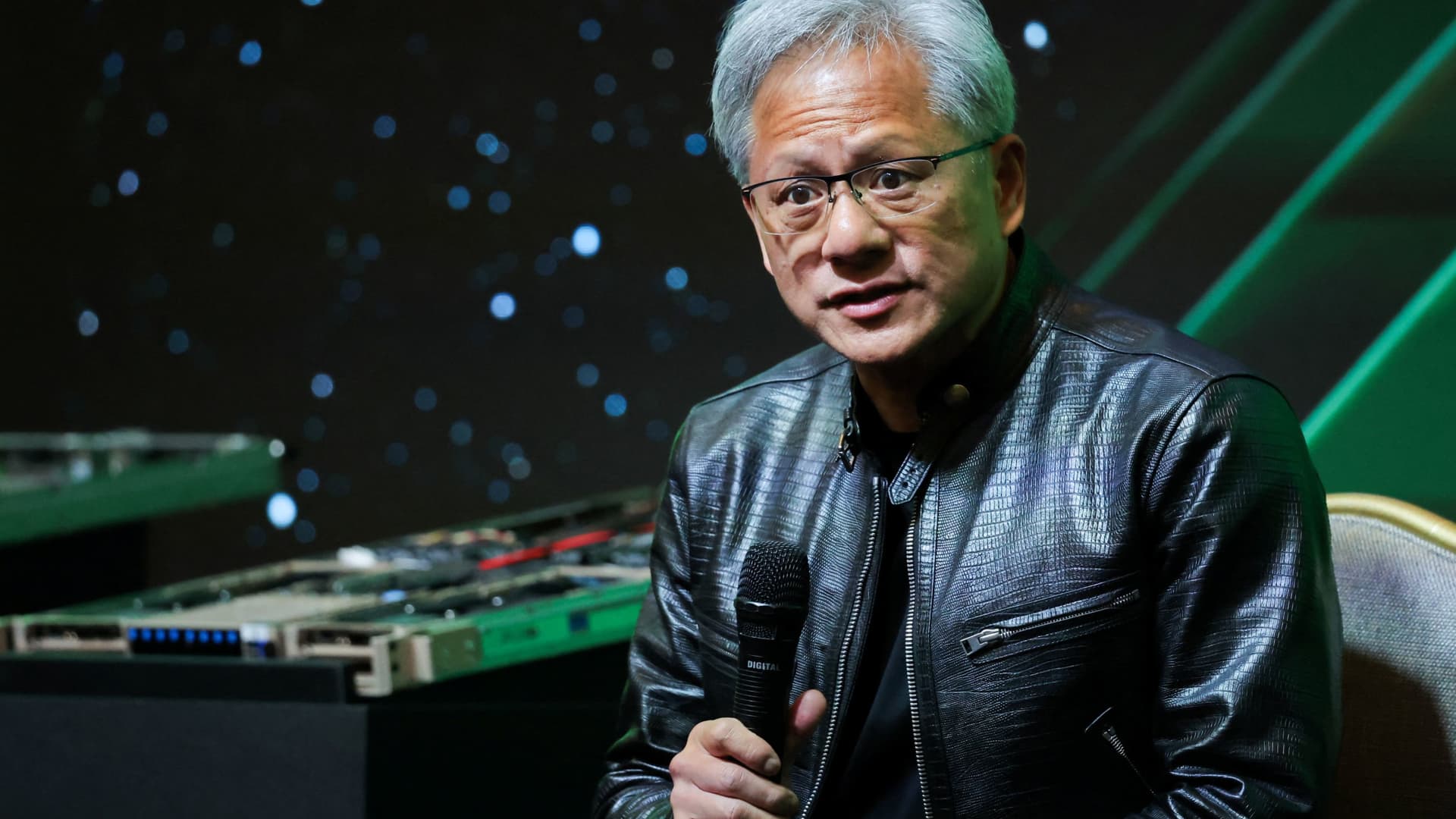

Prediction: Artificial intelligence (AI) stock Nvidia will struggle to maintain its trillion-dollar market cap through 2026

Fear of loss and big investment trends go hand in hand. Unfortunately, these “ingredients” do not mix well in the long run. Since the Internet took off roughly 30 years ago, no major technology, innovation, or other trend has come close to rivaling it… until now. The advent of …